By Ian King

September 18, 2024 at 05:34 GMT+7

The blistering stock rally that’s made Nvidia Corp. one of the world’s three most valuable companies is based on a number of assumptions. One is that artificial intelligence applications made possible by its semiconductors will become features of the modern economy. Another is that Nvidia, and the patchwork of subcontractors upon which it relies, can meet the exploding demand for its equipment without a hitch.

The excitement surrounding artificial intelligence has ebbed and flowed, but Nvidia remains the preeminent picks-and-shovels seller in an AI gold rush. Revenue is still soaring, and the order book for the company’s Hopper chip lineup and its successor — Blackwell — is bulging.

However, its continued success hinges on whether Microsoft Corp., Google and other tech firms will find enough commercial uses for AI to turn a profit on their massive investments in Nvidia chips. Meanwhile, antitrust authorities have zeroed in on Nvidia’s market dominance and are looking into whether it has been making it harder for customers to switch to other suppliers, Bloomberg has reported.

Nvidia shares suffered their worst one-day decline in four weeks on Aug. 29 after the chipmaker issued an underwhelming sales forecast. The company also confirmed earlier reports that Blackwell designs had required changes to make them easier to manufacture.

The stock has since bounced back from its August low. And the company’s revenue will more than double this year, according to estimates, repeating a similar surge in 2023. Barring a currently unforeseen share collapse, Nvidia will end the year as the world’s most valuable chipmaker, by a wide margin.

Here’s what’s driving the company’s spectacular growth, and more on the challenges that lie ahead.

What are Nvidia’s most popular AI chips?

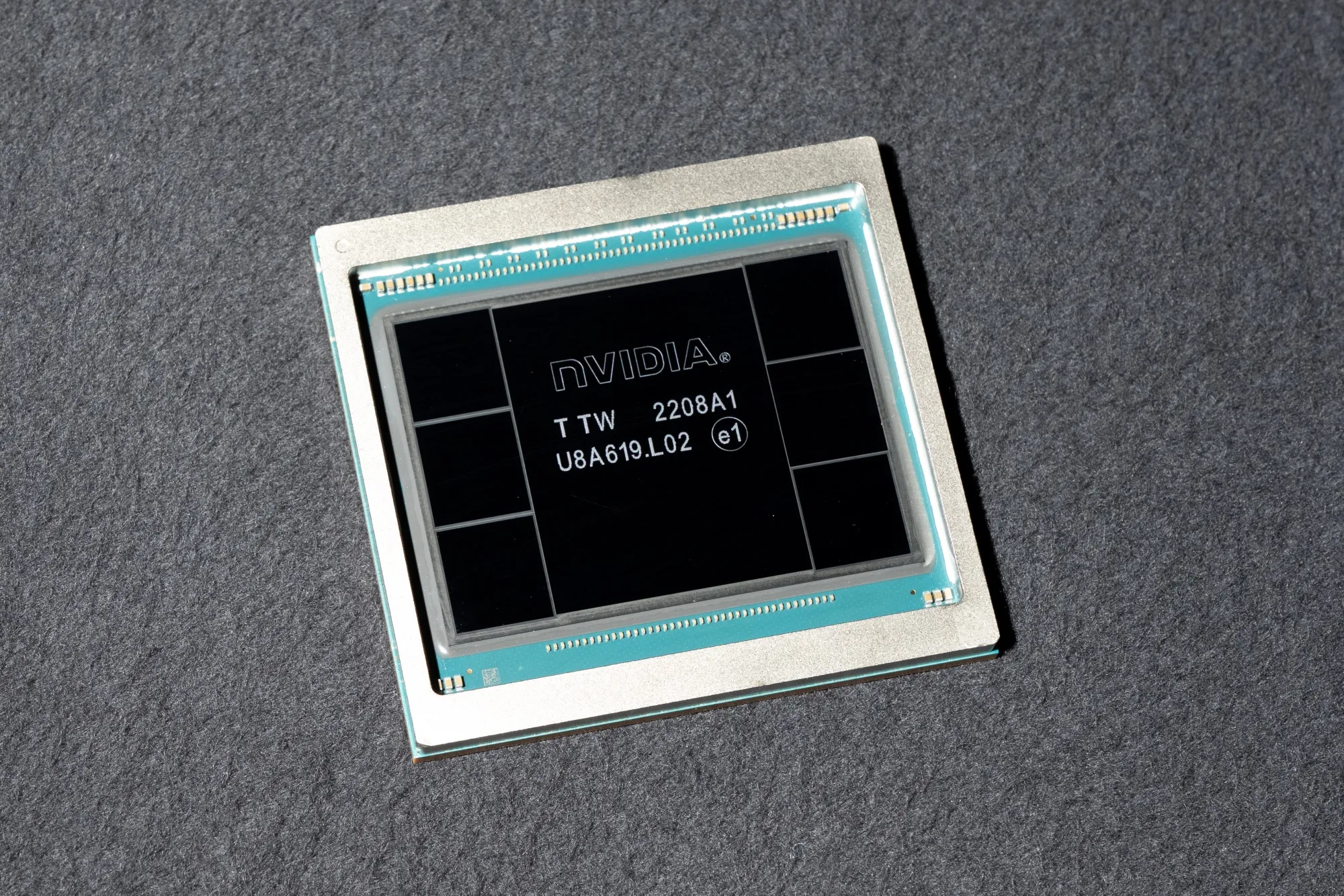

The current moneymaker is the Hopper H100, whose name is a nod to computer science pioneer Grace Hopper. It’s a beefier version of a graphics processing unit that normally lives in personal computers and helps video gamers get the most realistic visual experience. But it’s being replaced at the top of the lineup with the Blackwell range (named for mathematician David Blackwell).

Both Hopper and Blackwell include technology that turns clusters of Nvidia chips into single units that can process vast volumes of data and make computations at high speeds. That makes them a perfect fit for the power-intensive task of training the neural networks that underpin the latest generation of AI products.

The company, founded in 1993, pioneered this market with investments dating back almost two decades, when it bet that the ability to do work in parallel would one day make its chips valuable in applications outside of gaming.

The Santa Clara, California-based company will sell the Blackwell in a variety of options, including as part of the GB200 superchip, which combines two Blackwell GPUs, or graphics processing units, with one Grace CPU, a general-purpose central processing unit.

Why are Nvidia’s AI chips so special?

So-called generative AI platforms learn tasks such as translating text, summarizing reports and synthesizing images by ingesting vast quantities of preexisting material. The more they see, the better they become at things like recognizing human speech or writing job cover letters. They develop through trial and error, making billions of attempts to achieve proficiency and sucking up huge amounts of computing power along the way.
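
As a rough illustration of that trial-and-error process, the toy Python sketch below learns a single number by guessing, measuring how wrong the guess is and nudging it, over and over. The example data, the learning rate and the `train` function are all invented for illustration and do not come from any real AI system. Real generative AI platforms adjust billions of such numbers at once, which is where the appetite for computing power comes from.

```python
# Toy illustration only: learn the rule y = 2 * x from a handful of examples.
# All names and numbers here are hypothetical, not taken from any real AI system.

def train(examples, steps=200, learning_rate=0.01):
    weight = 0.0  # initial guess
    for _ in range(steps):
        for x, y in examples:
            prediction = weight * x
            error = prediction - y
            # Nudge the weight in the direction that shrinks the squared error.
            weight -= learning_rate * error * x
    return weight

examples = [(1, 2), (2, 4), (3, 6), (4, 8)]
learned = train(examples)
print(round(learned, 3))
```

After 200 passes over four examples, the learned weight settles very close to 2, the rule hidden in the data.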

Blackwell delivers 2.5 times Hopper’s performance in training AI, according to Nvidia. The new design has so many transistors — the tiny switches that give semiconductors their ability to process information — that it’s too big for conventional production techniques. It’s actually two chips married to each other through a connection that ensures they act seamlessly as one, the company said.

For customers racing to train their AI platforms to perform new tasks, the performance edge offered by the Hopper and Blackwell chips is critical. The components are seen as so key to developing AI that the US government has restricted their sale to China.

How did Nvidia become a leader in AI?

The Santa Clara, California-based company was already the king of graphics chips, the bits of a computer that generate the images you see on the screen. The most powerful of those are built with thousands of processing cores that perform multiple simultaneous threads of computation, modeling complex 3D renderings like shadows and reflections.

Nvidia’s engineers realized in the early 2000s that they could retool these graphics accelerators for other applications. AI researchers, meanwhile, discovered that their work could finally be made practical by using this type of chip.

What are Nvidia’s competitors doing?

Nvidia now controls about 90% of the market for data center GPUs, according to market research firm IDC. Dominant cloud computing providers such as Amazon.com Inc.’s AWS, Alphabet Inc.’s Google Cloud and Microsoft’s Azure are trying to develop their own chips, as are Nvidia rivals Advanced Micro Devices Inc. and Intel Corp.

Those efforts have done little to erode Nvidia’s dominance for now. AMD is forecasting as much as $4.5 billion in AI accelerator-related sales for this year. That’s a remarkable growth spurt from next to nothing in 2023, but still pales in comparison with the more than $100 billion Nvidia will make in data center sales this year, according to analyst estimates.
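
To put those two figures side by side, a back-of-the-envelope calculation in Python helps. It uses only the numbers cited above, and the variable names are illustrative. Because AMD's figure is an upper-end forecast and Nvidia's is "more than" $100 billion, the result is a ceiling on AMD's relative size rather than a precise share.

```python
# Figures from the article, in billions of US dollars.
amd_ai_sales_forecast = 4.5      # AMD's upper-end forecast for AI accelerator sales
nvidia_data_center_sales = 100   # floor of analyst estimates ("more than $100 billion")

ratio = amd_ai_sales_forecast / nvidia_data_center_sales
multiple = nvidia_data_center_sales / amd_ai_sales_forecast

print(f"AMD's forecast is at most {ratio:.1%} of Nvidia's expected sales")
print(f"Nvidia's expected sales are at least {multiple:.0f} times AMD's forecast")
```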

How does Nvidia stay ahead of its competitors?

Nvidia has updated its offerings, including software to support the hardware, at a pace that no other firm has yet been able to match. The company has also devised various cluster systems that help its customers buy H100s in bulk and deploy them quickly. Chips like Intel’s Xeon processors are capable of more complex data crunching, but they have fewer cores and are slower at working through the mountains of information typically used to train AI software. Intel, once the dominant provider of data center components, has struggled so far to offer accelerators that customers are prepared to choose over Nvidia fare.

How is AI chip demand holding up?

Nvidia Chief Executive Officer Jensen Huang has said customers are frustrated they can’t get enough chips. “The demand on it is so great, and everyone wants to be first and everyone wants to be most,” he said at a Goldman Sachs Group Inc. technology conference in San Francisco on Sept. 11. “We probably have more emotional customers today. Deservedly so. It’s tense. We’re trying to do the best we can.”

Demand for current products is strong and orders for the new Blackwell range are flowing in as supply improves, Huang said. Asked whether the massive AI spending is providing customers with a return on investment, he said companies have no choice other than to embrace “accelerated computing.”

Why is Nvidia being investigated?

Nvidia’s growing dominance has become a concern for industry regulators. The US Justice Department sent subpoenas to Nvidia and other companies as it seeks evidence that the chipmaker violated antitrust laws, escalating an existing investigation, Bloomberg reported on Sept. 3. Nvidia subsequently denied that it had received a subpoena, but the DOJ often sends requests for information in the form of what’s known as a civil investigative demand, which is commonly referred to as a subpoena. The Department of Justice has sent such a request seeking information about Nvidia’s acquisition of Run:ai and aspects of its chip business, according to one person with direct knowledge of the matter. Nvidia has said its dominance of the AI accelerator market comes from the superiority of its products and that customers are free to choose.

How do AMD and Intel compare with Nvidia in AI chips?

AMD, the second-largest maker of computer graphics chips, unveiled a version of its Instinct line last year aimed at the market that Nvidia’s products dominate. At the Computex show in Taiwan in early June, AMD CEO Lisa Su announced that an updated version of its MI300 AI processor would go on sale in the fourth quarter and said further products would follow in 2025 and 2026, a sign of her company’s commitment to this business area. AMD and Intel, which is also designing chips geared for AI workloads, have said that their latest products compare favorably to the H100 and even its H200 successor in some scenarios.

But none of Nvidia’s rivals has yet accounted for the leap forward that the company says Blackwell will deliver. Nvidia’s advantage isn’t just in the performance of its hardware. The company invented something called CUDA, a software platform and programming model for its graphics chips that lets developers program them for the type of work that underpins AI applications. Widespread use of that software tool has helped keep the industry tied to Nvidia’s hardware.

What is Nvidia planning on releasing next?

The most anticipated release is the Blackwell line, and Nvidia has said it expects to get “a lot” of revenue from the new product series this year. However, the company has hit engineering snags in its development that will slow the release of some products.

Meanwhile, demand for the H series hardware continues to grow. Huang has acted as an ambassador for the technology and sought to entice governments, as well as private enterprise, to buy early or risk being left behind by those who embrace AI. Nvidia also knows that once customers choose its technology for their generative AI projects, it will have a much easier time selling them upgrades than competitors will.

The Reference Shelf

- Nvidia is minting millionaires too busy to bask in their new wealth.

- More on how Nvidia competitor AMD is trying to fight back.

- How the US is trying to keep AI chips out of China.

- Britain’s Alan Turing Institute considers the ethics and safety of AI.

- Bloomberg Businessweek probes the back story of Jensen Huang’s big bet on AI.

- Fill any gaps in your knowledge with our glossary of AI terms to know.

— With assistance from Jane Lanhee Lee and Vlad Savov